W. Francis Mizell

GPS and GIS: The Untold Nightmare.

ABSTRACT:

GPS technology is not as easy to use as it may appear. Errors that invalidate the positional accuracy of GPS information can occur in many areas, including equipment configuration, software configuration, collection techniques, and data processing techniques. Equipment configuration errors can occur in the following TDC1 configuration options: PDOP mask, SNR mask, elevation mask, position mode, input mode, log DOP data, warning time, minimum positions, and constant offset. Software configuration errors can occur in the following options: format, position filter, coordinate system, units, and attributes. Collection technique errors are usually confined to offsets and multipath errors. Ways of avoiding these errors include creating software and equipment configuration checklists; extensive training and certification; automating the data processing; adding security to the system; allowing for visual verification of the final GPS data; and, finally, having a single database manager oversee the data collection process. Following these alternatives will reduce the amount of bad GPS data entering a database.

INTRODUCTION:

It was a nightmare! You had to throw the whole dinner out! Your in-laws were due any moment, and dinner tasted like old stamps with a hint of fish. That shrimp tortellini you tried at the company dinner was fantastic. You got the recipe from your friend Joanne and decided to impress your in-laws with the dish, following the directions exactly. But for some reason, the taste was different. Your wife tasted a sample and readily agreed, gently suggesting that perhaps eating out would be best. Well, there went making a good impression.

Perhaps the shrimp was old, or maybe the flame was too hot. Maybe it was the difference between a pinch and a dash of basil. Or perhaps you allowed the butter to separate. In any event, the dish did not taste right. You found Joanne the next day. She confessed that the recipe took time for her to perfect, but she promised that, given time, your shrimp tortellini would be just as mouthwatering as hers.

I know it sounds odd, but shrimp tortellini and the Global Positioning System (GPS) have much in common. The perception is that anyone can make shrimp tortellini given a recipe. How hard is it to follow directions? Similarly, the perception is that anyone can use GPS if given proper directions. While these statements may be true in the long run, the simple fact is that without training and practice, you may have to throw out your meal, and your data.

There is a knack, a sense of cohesion and comprehension, that comes with proper training, practice, and long-term exposure to a task, a piece of equipment, or a software package. Learning the difference between the taste of basil and thyme, or knowing how to minimize the risk of multipath effects, comes from learning, practice, and exposure. This understanding helps you avoid pitfalls, which are prevalent in any task, and it brings me to the focus of this paper. Without going into great detail, and in the context of GPS data managed within a GIS, I hope to make the reader aware of the subtleties of GPS technology, to suggest methods of avoiding these subtle pitfalls, and to champion the idea that GPS is not easy.

GPS THEORY:

Without getting entrenched in details, this section gives a brief overview of GPS theory. The Global Positioning System (GPS) is a technology that uses a series of Department of Defense (DOD) satellites, called a constellation, in non-geosynchronous orbits around the planet. The satellites are constantly updated with position information on every satellite in the constellation, so each satellite has a record of all the other satellite locations. This data is stored in an almanac. Each satellite also has an atomic clock, which is controlled by the DOD through daily updates and through a deliberate degradation of the signal (Selective Availability) in a process called dithering. This information is constantly transmitted from the satellites.

GPS receivers such as Trimble's Pro XL unit pick up these signals. Using the information embedded in the signal, the GPS receiver selects the best four satellites to use in the trilateration calculation that derives the final solution. In simplified form, the trilateration calculation for each measurement solves for four unknowns: the x value, the y value, the z value, and time. In mathematics, solving for four unknowns requires four equations; hence, you need four satellites.
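
To make the "four equations for four unknowns" idea concrete, here is a minimal Python sketch. It is not Trimble's algorithm. It assumes idealized pseudoranges, each being the true satellite-to-receiver range plus a receiver clock error expressed in meters, with Earth-centered coordinates in meters and no atmospheric or dithering errors. It solves these with Gauss-Newton iteration. The function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def solve_linear(a, b):
    """Solve a small square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def trilaterate(sats, pseudoranges, iterations=10):
    """Estimate receiver (x, y, z) and clock error from four pseudoranges.

    Each pseudorange is modeled as rho_i = |sat_i - p| + b, where b = c * dt is
    the receiver clock error in meters. Four satellites give four equations for
    the four unknowns (x, y, z, b).
    """
    x, y, z, b = 0.0, 0.0, 0.0, 0.0  # start at Earth's center with zero clock error
    for _ in range(iterations):
        jac, resid = [], []
        for (sx, sy, sz), rho in zip(sats, pseudoranges):
            r = math.sqrt((sx - x) ** 2 + (sy - y) ** 2 + (sz - z) ** 2)
            # Partial derivatives of the predicted pseudorange w.r.t. x, y, z, b
            jac.append([(x - sx) / r, (y - sy) / r, (z - sz) / r, 1.0])
            resid.append(rho - (r + b))
        dx = solve_linear(jac, resid)
        x, y, z, b = x + dx[0], y + dx[1], z + dx[2], b + dx[3]
    return (x, y, z), b / C  # position in meters, clock error in seconds
```

With more than four satellites, the Jacobian has more rows than columns and a least-squares solve would replace the square solve. That extension is omitted here to keep the sketch short.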

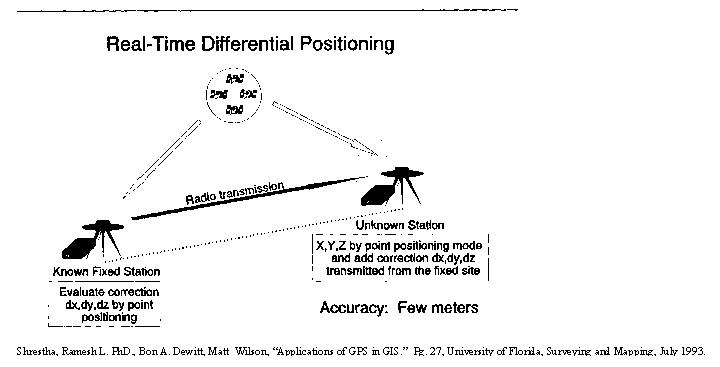

However, as mentioned earlier, the time variable is deliberately degraded by dithering. This time distortion in turn distorts GPS locations by as much as 400 meters (Figure 1).

FIGURE 1.

Military GPS units that decode the time variable are available to Federal agencies; however, the positional accuracy of these units is still only around 15 meters. To get 1-3 meter or sub-meter accuracy, differential correction is needed. Differential correction requires two sources of data. One is the raw, uncorrected GPS data captured by the GPS rover receiver (the unit in the field). The other is the correction information from a base station.

A base station is a stationary GPS receiver whose antenna position has been surveyed to sub-centimeter accuracy. Because the base station's true location is already known, the difference between its raw location and its known location measures the error in the raw data, and the raw data can be corrected for that variance. This process is completed on a second-by-second basis. The resulting correction data is then stored in flat files, broadcast via radio waves, or both.

Basically, the base station data offers a correction vector (i.e., distance and angle) that can then be applied to the raw data collected by the GPS rover, either in real time or by post processing. Each raw measurement is corrected using the correction vector that applies to the exact time the raw data was collected. After all the measurements have been corrected, they are averaged to supply a single solution: the location or point (Figure 2).
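
The per-second correction and averaging described above can be sketched in Python as follows. The sketch works in the position domain, as the paper describes it; real DGPS corrections (including RTCM SC-104 messages) are applied per satellite to pseudoranges instead. The dictionaries keyed by GPS time in seconds, the local east/north coordinates in meters, and the function names are assumptions for illustration.

```python
from statistics import mean


def correction_vectors(base_known, base_measured):
    """Per-second correction vectors: known base position minus measured base position.

    base_known: (east, north) surveyed coordinates of the base antenna.
    base_measured: dict mapping GPS time (s) -> (east, north) raw base solution.
    """
    ke, kn = base_known
    return {t: (ke - e, kn - n) for t, (e, n) in base_measured.items()}


def correct_and_average(rover_raw, corrections):
    """Apply the same-second correction to each rover measurement, then average.

    Rover measurements with no matching base correction are skipped, because a
    correction is only valid for the exact time it was computed.
    Returns ((east, north), number_of_measurements_used).
    """
    corrected = [
        (e + corrections[t][0], n + corrections[t][1])
        for t, (e, n) in rover_raw.items()
        if t in corrections
    ]
    if not corrected:
        raise ValueError("no rover measurements share a timestamp with the base")
    return (mean(e for e, _ in corrected), mean(n for _, n in corrected)), len(corrected)
```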

While this description is very basic, I encourage GPS users and users of GPS data to research the topic in greater detail. The better you understand the technology, the better equipped you are to deal with data problems caused by the subtle pitfalls of GPS.

FIGURE 2.

GPS PITFALLS:

The greatest difficulty with GPS is that most people perceive the solutions derived from the equipment as miracles. And, being miracles, the solutions must be precise, indisputable, infallible, and incorruptible. Unfortunately, the solutions can be dead wrong. An even bigger problem is that the dead-wrong solutions find their way into massive databases, hiding among correct data like viruses. Knowing where errors can occur within the GPS data collection process can help minimize the amount of locational error. This paper is not meant as an exhaustive disclosure of all possible GPS problems; rather, it is meant to make users of GPS data aware of some of its possible faults. With that purpose stated, there are basically three major areas of GPS data collection in which errors may occur: equipment configuration, collection procedures, and data processing.

Equipment Configuration:

Proper equipment configuration is vital to ensure accurate data collection, because the equipment settings create the environment that determines how and what data will be collected. For the purposes of this paper, I will refer to Trimble Navigation's Pro XL unit with a TDC1 datalogger and a beacon receiver for real-time differential correction. Depending on the equipment manufacturer and model, the item names I refer to may differ; however, most professional brands of GPS equipment have similar options. The Trimble Pro XL unit is made up of three devices: the TDC1 datalogger, the beacon receiver, and the GPS receiver. Short of software or hardware problems, however, the hazard area lies in the configuration of the TDC1 datalogger.

TDC1 Configuration for Satellite Information:

The datalogger has a number of configuration settings that are crucial to obtaining proper data. Errors from faulty configuration settings can cause positional errors in excess of 100 meters. The first group of settings deals with how the unit handles the incoming satellite signals. These settings include PDOP mask, SNR mask, elevation mask, position mode, and log DOP data.

PDOP Mask:

PDOP is an acronym for position dilution of precision, which is a fancy name for a measure of the statistical goodness of the satellite configuration. The satellite configuration is important because it determines the quality of ground truth one can obtain. A good satellite configuration, with the satellites spread widely across the sky, yields a low PDOP; a bad configuration, with the satellites bunched together, yields a higher PDOP.

The PDOP mask setting is a filter that turns off the data collection process whenever the PDOP rises above the mask value. To assure 95% confidence in meeting the 1-3 meter criterion, Trimble suggests a PDOP mask setting no greater than 4.0. However, practical experience shows that such a setting can require a lot of field time waiting for the satellites to reach an optimum configuration. A more realistic setting is a PDOP of 6.0, and the PDOP mask should be set no higher than 6.0. Position error grows in direct proportion to PDOP (roughly PDOP multiplied by the range error), so a mask of 8 accepts about twice the error of a mask of 4. Be wary.
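
A minimal sketch of how PDOP follows from satellite geometry and what a PDOP mask does with it. It uses the textbook definition: each row of a matrix G is a unit receiver-to-satellite vector plus a 1 for the clock term, and PDOP is the square root of the sum of the x, y, and z diagonal terms of the inverse of G^T G. The function names and the 6.0 default mask come from the discussion above. They are not TDC1 firmware.

```python
import math


def invert(m):
    """Invert a small square matrix with Gauss-Jordan elimination (partial pivoting)."""
    n = len(m)
    a = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("singular geometry: these satellites give no position fix")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [v - f * w for v, w in zip(a[r], a[col])]
    return [row[n:] for row in a]


def pdop(los_vectors):
    """PDOP from receiver-to-satellite line-of-sight vectors (any length, local frame)."""
    g = []
    for x, y, z in los_vectors:
        r = math.sqrt(x * x + y * y + z * z)
        g.append([x / r, y / r, z / r, 1.0])
    # Normal matrix G^T G (4 x 4); its inverse holds the dilution terms.
    gtg = [[sum(row[i] * row[j] for row in g) for j in range(4)] for i in range(4)]
    q = invert(gtg)
    return math.sqrt(q[0][0] + q[1][1] + q[2][2])


def passes_pdop_mask(los_vectors, mask=6.0):
    """Mimic a PDOP mask: accept the epoch only if PDOP does not exceed the mask."""
    return pdop(los_vectors) <= mask
```

One satellite overhead and three on the horizon, 120° apart, give a PDOP of about 1.63. Four satellites bunched within a few degrees of the zenith give a PDOP far above any sensible mask.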

Unfortunately, the PDOP mask is an easy target for the impatient GPS'er; let us call him FRED. The temptation to bump up the PDOP mask to 6.2, 7.5, or even 8 is great when the mosquitoes are biting, the heat is intense, and a cool, air-conditioned car waits.

SNR Mask:

The signal-to-noise ratio (SNR) compares the strength of the incoming satellite signal to the inherent noise generated by the atmosphere, obstructions, and other interference. If the satellite signal exceeds the noise by the specified ratio, the GPS unit continues to accept data; if it does not, the data is not accepted. Therefore, the higher the SNR, the better. Typically, the SNR mask is set to 6. Decreasing the SNR mask may introduce time coefficient errors, which will degrade your locational accuracy considerably. Fortunately, FRED rarely changes the SNR mask, because satellite signal strength seldom causes enough problems to warrant his attention. However, it is good practice to confirm that the setting is 6 or greater each time the unit is used.

Elevation Mask:

The elevation mask is a filter that disallows any signal from a satellite below a specified angle above the horizon, as determined from the satellite's ephemeris. Basically, if the satellite is below the suggested 15° above the horizon, the GPS unit will not use it to determine the final solution. The elevation mask is set to 15° to ward off atmospheric distortion and possible multipath errors due to structural interference. High multipath effects can degrade the time coefficient used to determine the solution and lessen the positional accuracy of the data.
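
The elevation mask and the SNR mask can be sketched together as a satellite screen. This sketch assumes each satellite's receiver-to-satellite vector is already expressed in local east, north, up (ENU) coordinates, and that its SNR is on the same scale as the TDC1 mask value. The dictionary layout and function names are hypothetical.

```python
import math


def elevation_deg(east, north, up):
    """Elevation angle above the local horizon, from an ENU line-of-sight vector."""
    return math.degrees(math.atan2(up, math.hypot(east, north)))


def usable_satellites(sats, elevation_mask=15.0, snr_mask=6.0):
    """Return sorted IDs of satellites that pass both the elevation and SNR masks.

    sats: dict mapping a satellite ID (PRN) to (east, north, up, snr).
    """
    return sorted(
        prn
        for prn, (e, n, u, snr) in sats.items()
        if elevation_deg(e, n, u) >= elevation_mask and snr >= snr_mask
    )
```

For example, a satellite whose vector rises only 0.1 unit for every unit of horizontal distance sits about 5.7° above the horizon and is rejected. A satellite at 35° with an SNR of 4 is rejected by the SNR mask instead.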

Another benefit of the 15° setting is that it increases your chances of using the same satellites that the base station is using. Base stations are set to 10° in order to see as many satellites as possible (base stations usually sit on very high towers, which effectively shift the horizon line). Because the base station sees more satellites than you may be seeing, the chance that your satellites are among the ones it is using increases.

This easy option is a good candidate for a FRED attack. By lowering the elevation mask, FRED can receive signals from satellites he could not read before. These satellites may indeed lower the PDOP, which enables him to keep collecting data and avoid the PDOP filter. There is currently no filter to catch elevation mask changes. However, there are times when this setting should be changed. For instance, if you are at the extreme edge of the base station's coverage, this angle should be increased to limit the satellites you can use. Because of the curvature of the earth, the farther the rover is from the base station, the fewer satellites the two have in common. To correct the positions, both the base station and the rover must be using the same satellites. This principle applies to both real-time and post-processed differential correction.
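
The requirement that the rover and base station use the same satellites is a set intersection. In this sketch, satellite IDs (PRNs) are plain integers. The four-satellite threshold reflects the four unknowns from the GPS THEORY section. The function names are hypothetical.

```python
def correctable_satellites(rover_sats, base_sats):
    """Satellites that can be differentially corrected: those tracked by both receivers."""
    return sorted(set(rover_sats) & set(base_sats))


def can_correct_3d(rover_sats, base_sats):
    """A corrected 3D fix needs at least four satellites in common (x, y, z, and time)."""
    return len(correctable_satellites(rover_sats, base_sats)) >= 4
```

A base station with a 10° mask tracking PRNs 1, 3, 6, 11, 14, 17, 22, and 25 shares four satellites with a nearby rover tracking 3, 6, 14, 22, and 31. A distant rover tracking 3, 6, 28, and 31 shares only two, so its data cannot be corrected.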

Position Mode:

Position mode determines what type of satellite configuration the GPS unit uses to calculate the location. There are four selections possible with the Pro XL unit: Manual 2D, Auto 2D/3D, Manual 3D, and Overdetermined 3D.

Manual 2D mode should never be used for regular GPS needs. This selection uses only three satellites to calculate the location instead of the required four, and it requires the user to enter a constant altitude instead of allowing the satellites to determine the altitude. Like time, altitude is one of the four coefficients used to calculate location. This selection may work on an ocean or a flat plain, but if there is any variance in elevation, the GPS unit will not compensate. Without an accurate altitude, the locational accuracy is suspect.

Unfortunately, FRED loves to use this option to cut corners. For example, there are times when you must wait for the four needed satellites before you can continue collecting data, but if FRED selects Manual 2D, he can collect points without waiting for the satellites. Another outcome of selecting Manual 2D is that it changes the PDOP equation: instead of four satellites, the equation now uses three. In most cases the PDOP will then fall below the required level and allow FRED to continue collecting data.

Auto 2D/3D mode should never be used for regular GPS needs either. This option uses Manual 3D mode until the PDOP rises above the PDOP switch setting. Once the PDOP has risen above that setting, the unit reverts to Manual 2D, and all the problems with Manual 2D apply.

Manual 3D mode is used most often. This mode uses four or more satellites to calculate the location. With all other required settings correct, this mode gives 95% confidence that your position will fall within 1-3 meters.

Overdetermined 3D mode gives the best possible solution. This mode forces the GPS unit to collect data only when there are at least five valid satellites to use; with fewer, the unit will not collect data. However, most users see this as overkill. With Manual 3D, the unit can still use more than four satellites when they are available, and the likelihood of having four satellites available is much better than that of having five. Therefore, Manual 3D is the preferred mode.
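
The four position modes can be summarized as a small decision function. This is a sketch of the behavior described above, not Trimble firmware logic. The mode strings, the 6.0 default for the PDOP switch, and the choice to report "no fix" when too few satellites are available are all assumptions.

```python
def fix_type(mode, n_sats, pdop, pdop_switch=6.0):
    """Return "3D", "2D", or None (no fix) for a position mode and satellite state.

    Modes (strings chosen for this sketch): "manual_2d", "auto_2d3d",
    "manual_3d", "overdetermined_3d". The PDOP mask is a separate filter
    and is not applied here.
    """
    if mode == "manual_2d":
        # Three satellites plus a user-entered constant altitude.
        return "2D" if n_sats >= 3 else None
    if mode == "auto_2d3d":
        # Behaves like Manual 3D until PDOP exceeds the switch, then drops to 2D.
        if n_sats >= 4 and pdop <= pdop_switch:
            return "3D"
        return "2D" if n_sats >= 3 else None
    if mode == "manual_3d":
        return "3D" if n_sats >= 4 else None
    if mode == "overdetermined_3d":
        return "3D" if n_sats >= 5 else None
    raise ValueError(f"unknown position mode: {mode!r}")
```

Under the paper's advice, only "3D" results should reach a GIS database. The Auto 2D/3D branch shows how 2D fixes can slip in without anyone noticing.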

Log DOP Data:

The Log DOP Data option records DOP data for each feature collected. Using this data, the data manager can quickly screen out problem data. While not using this option does not directly affect the accuracy of your final solution, it does open the way for FRED to slip data collected under a higher PDOP into a database unchecked.

Some see the log as a waste of space; however, given its value to the database manager as a QA/QC control, this item is invaluable, and the space it takes is inconsequential.

TDC1 Configuration for Beacon Information:

Other configuration settings that are critical to obtaining proper data deal with how the unit handles the incoming beacon signals. This signal uses a format developed by Special Committee 104 of the Radio Technical Commission for Maritime Services, known as the RTCM SC-104 (RTCM) format. These settings include RTCM Input Mode and Warning Time.

Input Mode:

The input mode setting offers the GPS'er the options ON, OFF, and AUTO. The ON option sets up the GPS unit for real-time differential correction; if there is no beacon (RTCM) signal present, collection ceases until the RTCM signal is regained. The OFF option sets the GPS unit for post processing; even if the RTCM signal is present, the equipment ignores it and collects raw data. Finally, the AUTO option allows the unit to correct differentially in real time while it is receiving an RTCM signal, and to continue collecting raw data when the RTCM signal cannot be received.
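
The three input modes map a per-epoch RTCM availability flag to what gets recorded. Here is a hypothetical sketch. The mode strings match the TDC1 option names above, but the function and its return labels are illustrative only.

```python
def handle_epoch(input_mode, rtcm_available):
    """What the datalogger does with one epoch under each RTCM input mode.

    Returns "real-time corrected", "raw", or None (collection paused).
    """
    if input_mode == "ON":
        # Real-time only: without a beacon signal, nothing is collected.
        return "real-time corrected" if rtcm_available else None
    if input_mode == "OFF":
        # Post-processing only: the beacon signal is ignored.
        return "raw"
    if input_mode == "AUTO":
        # Correct when possible, otherwise fall back silently to raw data.
        return "real-time corrected" if rtcm_available else "raw"
    raise ValueError(f"unknown input mode: {input_mode!r}")
```

Under AUTO, a session with intermittent beacon reception produces a mix of corrected and raw epochs. The raw epochs still need base station data and post processing.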

The problem with the AUTO option arises if you only want real-time differentially corrected data. Getting an RTCM lock can often be tedious and aggravating. If you do not know the tricks (and sometimes even if you do), there are times when you cannot get an RTCM lock and have to collect raw data. FRED will often collect raw data without even trying any tricks.

If you know that the data collected is raw, this is not necessarily a problem unless you do not have access to base station data. Without base station data, the information cannot be corrected and could be off by as much as 100 meters.

From experience, however, post processing is not something you would want to do every day, or ever. It is time-consuming work, and I personally avoid it like the plague. My rule of thumb is that if I cannot get a real-time corrected signal, post processing is the very last option in my arsenal.

Warning Time:

The warning time option allows the GPS'er to continue collecting data for a specified number of seconds (5, 10, 20, 50, or 100) after the RTCM signal has been lost. Once the RTCM signal is lost, the GPS unit keeps collecting data for the allotted time and applies the last correction vector received to all subsequent measurements. Given the uncontrollable nature of the raw measurements, a high enough setting could skew the averaged solution by as much as 100 meters.
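
Here is a sketch of the warning-time behavior. The last correction vector is reused until it is older than the warning time, after which collection stops, as it would with input mode ON. It assumes position-domain corrections, as in the differential correction description earlier, and integer GPS-second timestamps. Recording each epoch's correction age is a design choice of this sketch. It gives a data manager something to filter on.

```python
def apply_corrections(rover_raw, corrections, warning_time=10):
    """Correct rover epochs, reusing the last correction for up to warning_time seconds.

    rover_raw: list of (t, east, north) sorted by time t (seconds).
    corrections: dict mapping t -> (d_east, d_north) for seconds when RTCM was received.
    Returns a list of (t, east, north, age), where age is how old the applied
    correction is in seconds. Epochs whose last correction is older than
    warning_time are dropped.
    """
    out, last_t, last_c = [], None, None
    for t, e, n in rover_raw:
        if t in corrections:
            last_t, last_c = t, corrections[t]
        if last_c is None or t - last_t > warning_time:
            continue  # no usable correction: collection pauses
        out.append((t, e + last_c[0], n + last_c[1], t - last_t))
    return out
```

With corrections received only at seconds 0 and 1 and a 2-second warning time, seconds 2 and 3 are still labeled as corrected using the stale vector from second 1. Seconds 4 and 5 are dropped.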

In practice, however, warning time can be a useful tool for avoiding post processing. Combined with the ability to edit out points that fall more than a few standard deviations from the mean, this option can be a blessing. For example, if you were unable to get an RTCM lock at the feature site because of high-voltage power lines, but you could get a lock 40 feet away, you could use a technique called 'coming in hot'. This technique starts where the RTCM lock is established. The warning time is bumped up to the setting needed to get the required number of measurements. While receiving an RTCM signal with data collection paused, the GPS'er hurries to the feature site and resumes the collection process. Using this option requires special care, however: the data must be monitored for outliers, which may skew the location.
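
Editing out points that fall outside a few standard deviations might look like the following sketch. The two-standard-deviation cutoff (k=2.0) and the separate east and north tests are assumptions for illustration. A single pass like this uses a mean and standard deviation that the outliers themselves inflate, so a large cluster of bad points can escape it.

```python
from statistics import mean, pstdev


def drop_outliers(points, k=2.0):
    """Remove (east, north) positions more than k standard deviations from the mean.

    Each axis is tested separately; an axis with zero spread rejects nothing.
    """
    es = [e for e, _ in points]
    ns = [n for _, n in points]
    me, mn = mean(es), mean(ns)
    se, sn = pstdev(es), pstdev(ns)
    return [
        (e, n)
        for e, n in points
        if (se == 0 or abs(e - me) <= k * se) and (sn == 0 or abs(n - mn) <= k * sn)
    ]
```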

Of course, this option also introduces the possibility that FRED will abuse it. If you correct 100 seconds of raw data with a single correction vector, you might as well just take raw data. But FRED can slip this data into your database easily, because it is labeled as real-time corrected, and there are no filters to catch such errors.

Other Important TDC1 Configurations:

Still other TDC1 configuration options that are important for obtaining proper data concern the raw GPS data file (.SSF). These settings include Minimum Positions and Constant Offset.

Minimum Positions:

The Minimum Positions option allows the GPS'er to set the minimum number of measurements that must be collected before the GPS unit allows data collection to stop. This option does not stop data collection; it merely beeps to inform the GPS'er that they can stop taking measurements and go to the next feature.

One possible problem is that FRED can enter any number he desires; essentially, FRED could get one measurement for each feature. To reach the desired 95% confidence at the 1-3 meter level, however, Trimble suggests a minimum of 180 measurements. Fortunately, practical experience has shown that as few as 20 measurements are needed.

One measurement can also be taken in extreme cases. That one measurement is better than offsetting, increasing the warning time, bumping up the PDOP, changing the input mode, or post processing the data; at least you will have one good measurement. I am not implying that real-time correction is better than post-processed data. With the advent of carrier phase processing, post processing is much more accurate than real-time correction. However, the time involved in post processing must be taken into consideration. For small projects, post processing may be the best option, but for large projects with massive amounts of information, real-time correction is the preferred option.

Constant Offset:

The constant offset option allows for the capture of data at locations that are unreachable. For example, a highway centerline is not a good place to go GPS'ing; a prudent move would be to set a constant offset to the centerline of the highway and walk the edge of the road.

However, this option is not in the regular stream of options and can easily be overlooked if a new GPS'er grabs a unit previously set for a constant offset. If the data is caught in time, this is really no big deal; the data can be cleaned. But if the data is processed into a database without anyone knowing that an offset was applied, the data in the database is incorrect. Unfortunately, no one will ever know, and all the GPS data will be assumed accurate to 1-3 meters.

Fortunately, the actual offset settings are stored separately from the position data, and the offset must be applied within the software before it becomes part of the final solution. In most cases, however, the software's default is to apply offsets, which is why the constant offset option can be a problem.

If all these TDC1 options are correctly set, the risk of locational error is minimized, and you can say with 95% confidence that the solutions will be within 1-3 meters. But there is no fix for FRED. If FRED is on your team, you will have a hard time collecting good data, and you can take your 95% confidence and throw it out the window.

COLLECTION PROCEDURES:

Collection procedures are just as important as the configuration settings, because, used incorrectly, they can degrade the quality of the data. While the degradation may never reach the 100 meter range, collection procedures are impossible to monitor or filter. Once FRED has made his mistakes, the damage is done, and short of torture, you may never know how bad it is. I have already alluded to some possible problems in the previous section; in this section, I hope to shed light on other 'tricks of the trade', which can be either a blessing or a curse. These areas include offsets and multipath effects.

Offsets:

An offset allows the GPS'er to derive a solution away from the feature and then apply a distance, angle, and inclination to that solution, shifting its location close to that of the feature. There are valid uses for an offset: for example, a water well inside a pump house, or a rottweiler fenced in around the telephone pole you need to GPS. Of course, you could climb onto the roof of the pump house, or get the dog's owner to hold him, but practical experience says it is time to make an offset.

To make a correct offset, however, the GPS'er should take no fewer than two offsets for each feature, each from a different angle to the feature. For each offset, the GPS'er must measure the distance, angle, and inclination from the solution to the feature. This can be time-consuming. Moreover, the farther the GPS'er is from the feature, the larger the position error produced by any error in the measured angle. Because offsets are difficult, they invite FRED's creativity to bloom.
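
Here is a sketch of the offset arithmetic. It assumes local east/north/up coordinates in meters, azimuth measured clockwise from north, and inclination measured upward from horizontal along the line of sight. The function names are hypothetical. The `lateral_error` function shows why distance matters: for a fixed azimuth error, the sideways error equals the distance times the tangent of the angle error.

```python
import math


def apply_offset(east, north, up, slope_distance, azimuth_deg, inclination_deg):
    """Shift a GPS solution to the feature using distance, azimuth, and inclination."""
    incl = math.radians(inclination_deg)
    horiz = slope_distance * math.cos(incl)  # horizontal component of the offset
    az = math.radians(azimuth_deg)
    return (
        east + horiz * math.sin(az),
        north + horiz * math.cos(az),
        up + slope_distance * math.sin(incl),
    )


def lateral_error(distance, angle_error_deg):
    """Sideways position error caused by an azimuth error over a given distance."""
    return distance * math.tan(math.radians(angle_error_deg))


def average_offsets(*positions):
    """Average the feature positions computed from two or more independent offsets."""
    return tuple(sum(c) / len(positions) for c in zip(*positions))
```

For instance, a 5° azimuth error displaces the feature by about 0.9 m at 10 m but by about 2.6 m at 30 m. Averaging offsets taken from different angles helps because their errors point in different directions.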

The first thing FRED will do is gather the GPS data away from the physical feature and not apply the offset at all. If FRED is within the 1-3 meter radius, there is no significant problem, but the problem for you is that YOU WILL NEVER KNOW!

Then again, FRED could do the offsets. But, knowing FRED, he paced off the distance as ten paces, estimated his pace at approximately 3 feet, and entered 30 ft. FRED did not even use his inclinometer; instead, he looked at the sun and guessed the angle and inclination. Despite FRED and his influence, offsets are a useful tool when used correctly. You will never reach the 1-3 meter accuracy of normal data collection, but sometimes you have to take what you can get.

Multipath Effects:

Multipath effects are defined as the refraction or reflection of the satellite signal by electronic or physical interference. The reflected signal distorts the time variable in the location equation, yielding incorrect data. The problem is that you will never be aware of data that is distorted by multipath effects. While there is no cure, there are two ways to deal with this problem: adjust for multipath effects through software enhancements, or avoid the interference as best you can. Recent firmware releases have made advances in multipath reduction. However, if you have an older unit, chances are that it lacks this addition, which leaves the challenge of avoiding multipath effects to the GPS'er.

Avoiding multipath effects is simple. If you are surrounded by buildings, water towers, trees, large vehicles, large people (no kidding), or electromagnetic fields (power lines, generators, big motors), the chance of multipath effects degrading your data is great. Your alternatives are to take the position while noting that multipath effects may be present, to raise the GPS antenna, or to take an offset away from the obstructions. I would suggest two things. First, use a data dictionary or GPS log book to note the environment in which you are taking the position. For instance, if you are downtown between buildings, under a water tower, by a cliff face, or next to high-voltage wires, these GPS environmental variables should be noted. This GPS metadata allows users of the GPS information to select and check data for possible multipath errors.
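
If the environment notes are captured consistently, screening for possible multipath becomes a simple query. In this sketch, the record layout (an "id" and a list of "environment" notes) and the category names are hypothetical. They stand in for whatever a real data dictionary uses.

```python
# Hypothetical environment categories that suggest possible multipath.
MULTIPATH_PRONE = {"urban canyon", "water tower", "cliff face", "power lines", "tree canopy"}


def flag_possible_multipath(records):
    """Return IDs of features whose logged environment suggests possible multipath.

    records: iterable of dicts with an "id" and an optional "environment" list.
    """
    return [r["id"] for r in records if MULTIPATH_PRONE.intersection(r.get("environment", []))]
```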

My second suggestion would be to purchase a long range pole. Most GPS antennas have a fairly long cable attached (approximately 15 feet), and extensions are available. For example, to avoid multipath problems while GPS'ing the boundary of a fernery under a heavy oak canopy, one GPS'er got a cable extension and a fifty-foot range pole. The job was completed in short order, and because the antenna was above the tree canopy, the canopy's multipath errors were eliminated.

Of course, there is always FRED, who will do as little as possible. Multipath effects mean nothing to him, because they do not slow him down one bit. There are no filters to catch these errors, nor are there any settings that could eliminate the problem. So the only efficient means of avoiding FRED's bad data is to be wary of data collected in geographic locations that may cause multipath errors. Besides offsets and multipath effects, there are other collection procedures that can affect the accuracy of the data. These include the antenna location, position, and angle; the number of positions captured; the data processing; the data entry; the data dictionaries; and so on. However, my intent with this paper is to dispel the idea that GPS is easy, not to list every possible problem there may be with GPS.

DATA PROCESSING:

The last area in which errors can occur during the GPS data collection process is data processing. The Trimble unit downloads raw files that can only be read by Trimble software such as Pathfinder Office (Pfoffice). Pfoffice can process these raw files by filtering and editing the data, and it offers multiple projection and format outputs. Unfortunately, more options bring a greater probability that the settings will be changed and, in so doing, degrade the output data. Again, if the mistake is not caught, the data could be placed in a database, and the error would be difficult to locate. Therefore, in this section I will point out probable areas of trouble. I do not intend to go through every possible setup option, as my intent with this paper is to convey that GPS is neither easy nor error free. The main problems arise in the processing of the data, so, using Trimble's Pfoffice batch processor program, I will go through the major problem areas. The biggest of these lies in the export setup options, which include setup criteria for format, position filter, coordinate system, attributes, and units, all of which could affect the usability or positional accuracy of the output data.

Format:

The format option allows the GPS'er to select which output format to use. Format options include PC and UNIX ArcInfo generate, ArcView shapefile, dBASE, and many more. While this option cannot degrade the locational accuracy of the data, it does add to the aggravation if you receive files in the wrong format. For instance, if your data dictionary has items with multiple-word entries like "Las Vegas Nevada", some formats will not place quotes around the attributes. If you are trying to pull this data into a coverage, this could be a problem. Or maybe you are expecting a shapefile and get a flat file instead. As I stated, this type of error is not critical (unless the original raw files are deleted), but it is an annoyance.
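
The quoting problem is easy to see with a space-delimited record. The record layout below (id, city, value) is hypothetical and is not the actual ArcInfo generate or dBASE layout. It only shows how an unquoted multi-word attribute changes the field count that an importer sees. Python's standard `shlex.split` honors double quotes the way many delimited-text readers do.

```python
import shlex

EXPECTED_FIELDS = 3  # hypothetical schema: id, city, value


def parse_record(line, expected=EXPECTED_FIELDS):
    """Split a space-delimited attribute record, honoring double quotes.

    Raises ValueError when the field count is wrong, which is what happens when a
    multi-word attribute such as Las Vegas Nevada is exported without quotes.
    """
    fields = shlex.split(line)
    if len(fields) != expected:
        raise ValueError(f"expected {expected} fields, got {len(fields)}: {fields}")
    return fields
```

The quoted record `101 "Las Vegas Nevada" 3.2` parses into three fields. The same record without quotes splits into five fields and is rejected.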

Position Filter:

The position filter options are some of the most important options the software offers. They allow the data to be filtered to weed out some of FRED's misdeeds; however, if FRED is also doing the processing, you may have a problem. Note that these filters only work if the 'Log DOP Data' option was selected on the TDC1. The filters can check the number of satellites used, so you can catch whether FRED used the Manual 2D or Auto 2D/3D setting in the TDC1 Position Mode option. PDOP can also be filtered: no measurement with a PDOP greater than the filter setting is allowed to affect the averaged solution. Finally, the filter options can screen for different types of GPS correction processes. For example, you can filter out uncorrected, non-GPS, P(Y) code (military, unscrambled), differentially corrected, real-time differential, carrier phase processed, and survey grade measurements. The position filter tool is one of the best methods of fighting off bad data. If it is set up correctly, many of FRED's tricks can be caught before the data is entered into a database or coverage.
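
The three kinds of position filter described above can be sketched as a single pass over the logged measurements. The `Measurement` fields, the correction-type strings, and the defaults (four satellites, a PDOP of 6.0) are assumptions for illustration, not Pfoffice's internal names. This sketch rejects measurements that lack logged DOP data. That is a design choice reflecting the point that these filters depend on 'Log DOP Data'.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Measurement:
    """One logged GPS measurement (hypothetical field names)."""
    satellites: int
    pdop: Optional[float]  # None when 'Log DOP Data' was not selected
    correction: str


def position_filter(measurements, min_satellites=4, max_pdop=6.0,
                    allowed_corrections=("real-time differential", "differentially corrected")):
    """Keep measurements that pass satellite-count, PDOP, and correction-type filters."""
    return [
        m for m in measurements
        if m.pdop is not None
        and m.satellites >= min_satellites  # catches Manual 2D / Auto 2D/3D fixes
        and m.pdop <= max_pdop              # catches a bumped-up PDOP mask
        and m.correction in allowed_corrections
    ]
```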

Coordinate System:

I believe the importance of the coordinate system option is self-evident to any GIS tech, analyst, or database manager. This option lets you choose the projection, and the raw data is converted into that projection. The software allows for two types of export setups: an exclusive export setup and a current display setup. I would suggest using the first option.

Pfoffice has a configuration setup menu on its home menu page, and the current display setup in the export setup options defaults to this configuration. The problem is that the home menu page is too easily accessible, so any user of the software may change the projection for their own needs. When that happens, the current display setup changes along with the home menu configuration setup.

However, if the export setup is programmed with the needed projection, the bad user will have to enter the batch processor to change it. That change is not as easy as the first and is less likely to occur, which is why the exclusive export setup is preferred. I do not have to tell you what can happen when you combine location information in two different projections. Needless to say, the data will be locationally impaired.
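
The exclusive export setup protects the data only if nobody later merges batches that were exported under different setups. A small guard, sketched here under the assumption that each export batch carries a coordinate-system label (the field names and labels are hypothetical), can refuse such a merge before it reaches the database.

```python
def merge_batches(batches):
    """Combine exported feature batches, refusing to mix coordinate systems.

    Each batch is a dict with a 'coordinate_system' label (hypothetical:
    whatever name your export setup records) and a list of 'features'.
    """
    systems = {batch["coordinate_system"] for batch in batches}
    if len(systems) > 1:
        raise ValueError("mixed coordinate systems: " + "; ".join(sorted(systems)))
    merged = []
    for batch in batches:
        merged.extend(batch["features"])
    return merged

monday = {"coordinate_system": "State Plane FL East, NAD83, US ft",
          "features": [{"id": 1}, {"id": 2}]}
tuesday = {"coordinate_system": "UTM zone 17N, NAD83, m",
           "features": [{"id": 3}]}

print(len(merge_batches([monday, monday])))  # 4
try:
    merge_batches([monday, tuesday])
except ValueError as err:
    print(err)
```

The guard compares labels only; it cannot detect a batch whose label does not match the projection actually used, so it complements the exclusive export setup rather than replacing it.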

Units:

Not much attention has been given to units, but I must get on my soapbox at this point. If you intend to publish the locations in a database or in a paper, I beseech you not to report more than one decimal place of a second. However, if you are going to generate a coverage, I would keep as many decimal places as are available. To illustrate my grievance, allow me to give you an example.

In Florida, one second of longitude and one second of latitude span approximately 89 and 101 feet respectively. If we round both to an even 100 feet, then one decimal place of a second is 10 feet, and two decimal places equate to 1 foot. Only a survey grade GPS unit has the ability to meet sub-meter accuracy. If you are using a Pro XL or XR, then the most you can hope for is an accuracy of 1-3 meters (roughly 3-10 feet), which supports one decimal place at most. Therefore, if you are publishing locational data, use only one decimal place, if any.
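
The per-second figures above can be checked with the standard radii of curvature of the WGS84 ellipsoid. This short sketch is an illustration only, not part of any GPS software; 29 degrees N is an assumed mid-Florida latitude.

```python
import math

A = 6378137.0                    # WGS84 semi-major axis (m)
F = 1 / 298.257223563            # WGS84 flattening
E2 = F * (2 - F)                 # first eccentricity squared
ARCSEC = math.pi / (180 * 3600)  # one arc-second in radians
M_TO_FT = 1 / 0.3048             # metres to international feet

def feet_per_arcsecond(lat_deg):
    """Return (latitude, longitude) ground distance in feet for one arc-second."""
    phi = math.radians(lat_deg)
    w = 1 - E2 * math.sin(phi) ** 2
    meridian_radius = A * (1 - E2) / w ** 1.5  # meridional radius of curvature (north-south)
    normal_radius = A / math.sqrt(w)           # prime-vertical radius of curvature (east-west)
    lat_ft = meridian_radius * ARCSEC * M_TO_FT
    lon_ft = normal_radius * math.cos(phi) * ARCSEC * M_TO_FT
    return lat_ft, lon_ft

def last_digit_feet(lat_deg, decimals):
    """Ground size of the last reported decimal place of seconds (larger axis)."""
    return max(feet_per_arcsecond(lat_deg)) * 10 ** -decimals

lat_ft, lon_ft = feet_per_arcsecond(29.0)
print(round(lat_ft), round(lon_ft))  # 101 89
for d in range(3):
    print(d, "decimal places ->", round(last_digit_feet(29.0, d), 1), "ft")
```

With 1-3 m (about 3-10 ft) of accuracy, the tenth-of-a-second digit (about 10 ft) is the finest one the data can honestly support; a second decimal place would imply foot-level precision the receiver cannot deliver.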

However, if you are generating a coverage, go for as many decimal places as you can. This reduces the rounding errors that may creep into location calculations during the coverage generation process and ensures that the integrity of the 1-3 meter GPS accuracy is maintained.
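
A rough sketch of why the extra digits matter when building a coverage: rounding a coordinate to n decimal places of seconds can move it by up to half of the last digit. The figure of 101 ft per second of latitude is an approximation for Florida, and the sample coordinate is hypothetical.

```python
FT_PER_ARCSEC = 101.0  # approximate north-south feet per arc-second in Florida

def round_to_seconds(value_deg, decimals):
    """Round a decimal-degree coordinate to `decimals` places of arc-seconds."""
    return round(value_deg * 3600, decimals) / 3600

def worst_case_error_ft(decimals):
    """Largest shift rounding can introduce: half of the last decimal place."""
    return 0.5 * 10 ** -decimals * FT_PER_ARCSEC

lat = 29.6512345678  # hypothetical latitude in decimal degrees
for d in (1, 4):
    shift_ft = abs(round_to_seconds(lat, d) - lat) * 3600 * FT_PER_ARCSEC
    print(d, "decimals:", round(shift_ft, 4), "ft (worst case",
          round(worst_case_error_ft(d), 4), "ft)")
```

Half a tenth of a second is about 5 ft, or roughly 1.5 m, a large slice of a 1-3 m error budget; at four decimal places the rounding contribution becomes negligible.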

Attributes:

The attribute options will not affect your locational accuracy if you choose none of them. However, Pfoffice offers a good selection of troubleshooting attributes that can help in identifying bad data: maximum PDOP, correction type, receiver type, data file name, time recorded, date recorded, position count (unfiltered), position count (filtered), standard deviation, and, for post-processed data, a confidence interval.

In a database, these sources of information can help determine why locations are corrupt and aid in database management. For instance, if a location is suspect, the database manager need only compare the two position counts to see whether any measurements were filtered out of the averaged solution. A quick look at the PDOP and standard deviation will then show whether there was a good cluster of measurements. The correction type tells whether the position was post-processed, real-time, or corrected some other way. Finally, the manager can use the time and date to find the original raw data file and examine the original measurements.
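
The review just described lends itself to a simple checklist function. This is a minimal sketch, assuming each feature's troubleshooting attributes have been exported into a Python dict; the key names, thresholds, units, file name and date below are hypothetical, not Pfoffice's actual field names.

```python
def diagnose(record, max_pdop=6.0, max_std_dev=3.0):
    """List reasons a feature's location may be suspect (empty list = none found)."""
    reasons = []
    removed = record["count_unfiltered"] - record["count_filtered"]
    if removed > 0:
        reasons.append(f"{removed} of {record['count_unfiltered']} positions were filtered out")
    if record["max_pdop"] > max_pdop:
        reasons.append(f"maximum PDOP {record['max_pdop']} exceeds {max_pdop}")
    if record["std_dev"] > max_std_dev:
        reasons.append(f"standard deviation {record['std_dev']} exceeds {max_std_dev}")
    if record["correction_type"] == "uncorrected":
        reasons.append("position was never corrected")
    if reasons:
        # Point the manager back at the raw file to examine the original measurements.
        reasons.append(f"check raw file {record['data_file']} "
                       f"({record['date_recorded']} {record['time_recorded']})")
    return reasons

good = {"count_unfiltered": 30, "count_filtered": 30, "max_pdop": 3.2,
        "std_dev": 0.8, "correction_type": "postprocessed",
        "data_file": "R010115A.SSF", "date_recorded": "1997-01-01",
        "time_recorded": "09:15"}
suspect = dict(good, count_filtered=12, max_pdop=9.5, std_dev=6.1)

print(diagnose(good))  # []
for reason in diagnose(suspect):
    print(reason)
```

Because each attribute is checked independently, the output tells the manager not only that a location is suspect but which of the troubleshooting attributes raised the flag.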

I strongly encourage the use of these attributes.

They are a great way to battle the FREDDIES of the GPS world, and they

enhance the reliability of the data in your charge.

AVOIDING GPS PITFALLS:

I have already mentioned some ways of avoiding the pitfalls of GPS data; here I hope to offer you a useful arsenal. Most of the ideas are common sense, and regrettably, FRED can circumvent all of them. To be honest, there is no cure for FRED except to get rid of him. My arsenal includes configuration checklists for software and GPS units, a rigorous training and certification process, automated data processing, security, visual inspection and acceptance of geo-referenced GPS information by the GPS'er, and finally, a single database manager.

Configuration Checklist:

A checklist is an easy way of getting everyone on the same track. Make a list of all the possible settings on the GPS unit and its related software, and set up a standard for your organization, department, or division. Make this checklist available to each person who uses the equipment. Encourage its use by periodically changing the settings on the GPS unit and the software to ensure compliance. With a checklist, each GPS unit should be set for optimum performance, and that is one step in the right direction.
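
Once the standard exists, comparing a unit against it is mechanical. In this sketch the option names follow the TDC1 settings discussed earlier, but the values are placeholders, not recommendations, and the settings dict is assumed to have been transcribed from the unit by hand.

```python
# Hypothetical organizational standard; the values are placeholders, not recommendations.
STANDARD = {
    "pdop_mask": 6,
    "snr_mask": 6,
    "elevation_mask_deg": 15,
    "position_mode": "Manual 3D",
    "log_dop_data": True,
    "minimum_positions": 1,
}

def audit(settings, standard=STANDARD):
    """Return {option: (found, expected)} for every setting that departs from the standard."""
    return {option: (settings.get(option), expected)
            for option, expected in standard.items()
            if settings.get(option) != expected}

unit = dict(STANDARD, pdop_mask=12, log_dop_data=False)
print(audit(unit))  # {'pdop_mask': (12, 6), 'log_dop_data': (False, True)}
```

An option missing from the unit's settings is reported as (None, expected), so an incomplete transcription is flagged rather than silently passed.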

Training and Certification:

Training and certification are paramount to a successful GPS data collection process. However, do not be lulled into thinking that you can send a few people to Trimble's certification class for a week and they will come back knowing what they are doing. The training I am referring to is a series of training sessions with both a written and a practical test.

For example, a training program I put together for the St. Johns River Water Management District (SJRWMD) in Florida consisted of a Trimble certification (1 week), a departmental GPS certification (1 week), a month of on-the-job training, a written test (a score of 85 to pass), a field practical, and an intern/mentor period. These gateways must be passed before an employee can enter data into the database, and data sent by a new GPS'er is closely monitored. Still, even with all these precautions, we had a few GPS'ers who never got the knack of GPS'ing. I relate this experience because it is a good example of a rigorous training regimen that still had participants who passed through the gateways but were not good GPS'ers. There were even some FREDDIES in the lot. This may give you an idea of both the difficulty of proper training and the need for it.

Beyond that, I encourage you to teach your crew more than they really need to know. If I may be so black and white, there are two types of GPS'ers: the foxes and the lemmings. Don't worry about the lemmings; they will only do what you tell them to do. Worry about the foxes. The foxes are crafty and will root out things you never dreamed they would find. If you show them how things work, they will be less likely to mess with them later.

Automated Data Processing:

Another way to restrict the entry of bad data is to take most of the processing variables out of people's hands and put them into automation. By automating a majority of the process, you limit the number of software configuration errors that can cause problems. You remove FRED's ability to turn off the filters that screen the data, and you eliminate transcription errors. Automation is not an easy endeavor, but once in place, it saves time and effort, and it increases the integrity of the database.

Second, automated processing forces regimentation in field data collection. Data dictionaries must be set up exactly, offset procedures must be addressed, data entry criteria must be established, and an overall QA/QC dogma for data collection can be set for all the GPS'ers in the project. Again, this builds teamwork and dedication among the GPS'ers and the database manager. With automation, FRED will have a hard time slacking off. Not only will automation remove much of his opportunity for tampering, but others in the program are also likely to confront FRED's lack of commitment.
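
Data entry criteria of this kind can be enforced automatically at processing time. The sketch below assumes a hypothetical data dictionary expressed as a Python dict (feature types, attribute names and allowed values are invented for illustration); it is not the format of any Trimble data dictionary file.

```python
# Hypothetical data dictionary: feature type -> {attribute: allowed type(s) or set of values}
DATA_DICTIONARY = {
    "Well": {"depth_ft": (int, float), "status": {"active", "abandoned"}},
    "Culvert": {"diameter_in": (int, float)},
}

def validate_feature(feature_type, attributes, dictionary=DATA_DICTIONARY):
    """Return a list of data entry problems; an empty list means the feature passes."""
    spec = dictionary.get(feature_type)
    if spec is None:
        return [f"unknown feature type {feature_type!r}"]
    errors = []
    for name, rule in spec.items():
        if name not in attributes:
            errors.append(f"missing attribute {name!r}")
        elif isinstance(rule, set):
            # Menu-style attribute: the value must be one of the listed choices.
            if attributes[name] not in rule:
                errors.append(f"{name!r} must be one of {sorted(rule)}")
        elif not isinstance(attributes[name], rule):
            errors.append(f"{name!r} has the wrong type")
    for name in sorted(attributes.keys() - spec.keys()):
        errors.append(f"attribute {name!r} is not in the data dictionary")
    return errors

print(validate_feature("Well", {"depth_ft": 120, "status": "active"}))  # []
print(validate_feature("Well", {"status": "capped", "owner": "FRED"}))
```

Rejecting unknown attributes as well as missing ones keeps every GPS'er's data in the same shape, which is what lets the rest of the processing run unattended.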

Security:

Automation does one more thing: it offers the opportunity to add security to the database. With automation, passwords can be added to any program, limiting who can add GPS data. Any data collection effort should consider some type of security. This way the data is secure, and its integrity can be maintained.

Along with password protection, another form of security is to append the GPS'er's name to each feature he or she collected. This gives the GPS'er a sense of responsibility for, and ownership of, the data. This type of security accomplishes two things: the database's integrity is kept high, and the GPS'ers become more dedicated. Given this dedication, FRED hasn't a chance. For instance, with our system, I regularly get complaints from one crew member about another crew member's GPS practices or about the data he collected. I find this dedication indispensable for finding errors and weeding out the FREDDIES in the group.
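
Both measures can be combined in one gate at the point where data enters the database. This toy sketch uses only the Python standard library (PBKDF2 password hashing via hashlib and a constant-time comparison via hmac); the GpsDatabase class, user name and feature fields are hypothetical, and a production system would normally rely on the database's own access control.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # PBKDF2 work factor (illustrative value)

def hash_password(password, salt=None):
    """Return (salt, digest) using PBKDF2-HMAC-SHA256."""
    if salt is None:
        salt = os.urandom(16)
    return salt, hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)

class GpsDatabase:
    """Toy feature store: only registered GPS'ers may add data, and every
    accepted feature is stamped with the name of the person who collected it."""

    def __init__(self):
        self._users = {}  # name -> (salt, digest)
        self.features = []

    def add_user(self, name, password):
        self._users[name] = hash_password(password)

    def submit(self, name, password, features):
        if name not in self._users:
            raise PermissionError(f"{name} is not an authorized GPS'er")
        salt, stored = self._users[name]
        _, candidate = hash_password(password, salt)
        if not hmac.compare_digest(stored, candidate):
            raise PermissionError("wrong password")
        for feature in features:
            self.features.append({**feature, "collected_by": name})
        return len(features)

db = GpsDatabase()
db.add_user("Pat", "s3cret")
db.submit("Pat", "s3cret", [{"type": "Well", "depth_ft": 120}])
print(db.features)  # [{'type': 'Well', 'depth_ft': 120, 'collected_by': 'Pat'}]
try:
    db.submit("FRED", "guess", [{"type": "Well"}])
except PermissionError as err:
    print(err)  # FRED is not an authorized GPS'er
```

Stamping the name inside the gate, rather than trusting a name typed in the field, means the ownership record cannot be filled in on someone else's behalf.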

Visual Inspection:

One more step that automation makes easy is the visual verification of the GPS data in a GIS. The most powerful tool is to see the final data in a spatial context, with roads and hydrography as references. It is also important to have the individual who GPS'ed the features visually check that they are in the correct location. It does no good for a GIS analyst to look at the data if the analyst was not at the GPS site; the analyst knows nothing of the site, nor where the feature should be. Logic dictates that the data gatherer should verify that the processed data is in the correct location on screen or on a map. If the data is incorrect for any reason, it should not be allowed into the database until it has been corrected.

This process need not be truly automated. Simply pulling the data from shapefiles into ArcView can serve the same purpose. However, I stress that without this step, bad data will almost certainly find its way into the database. As a case in point, let me again refer to the application used by SJRWMD, where visual verification has uncovered many GPS data errors. In one instance, a GPS'er collected data all day. Unfortunately, he did not check his TDC1 configuration, and someone had changed the PDOP setting to 12. As a result, he gathered positions with too high a PDOP. The automated filters, however, removed the bad data. When he tried to verify the locations of the sites visually, he noticed that over half his data was missing. The filters did the job, and the visual verification proved it. The GPS'er also learned a valuable lesson.

Database Manager:

One last tool in the arsenal against bad GPS data is a single database manager. When one person is responsible for a database, errors are greatly reduced. The database manager is also a central contact for the GPS'ers: someone who is knowledgeable about the data and can help troubleshoot problems. I stress, however, that the database manager must be more knowledgeable about GPS technology than those collecting the data. Experience has shown that the database manager is inundated with GPS-related questions from users. As the custodian of the data, the manager must know how to address GPS-related problems. If the manager's knowledge is sparse, errors can go unnoticed.

CONCLUSION:

While I hope I have impressed upon you the fact that GPS is not easy, I also hope that I have not dissuaded you from using this technology. While some would see its shortcomings and rule against using GPS, I see GPS as a tool that one must learn to use properly. Knowing how GPS operates, understanding the need for equipment and software configuration checklists, realizing the importance of proper training, appreciating the power of automation to facilitate security and data verification, and understanding the role of a database manager can all help to eliminate many GPS-related problems.

I have also offered insight into the places where errors can occur, both unintentionally and intentionally. Armed with this knowledge, I hope you are prepared to look more carefully at GPS data. Do not take data that seems to be GPS verified for granted. Ask serious questions about how the data was collected. Ask what the TDC1 configurations were. Ask for the original raw data. If the data supplier cannot answer your questions, then it may be wise not to accept the data.

Remember that time is necessary to fully understand any technology. With proper training, practice, and long-term exposure to GPS, you will get the knack, and you will not have to throw out your data. However, your shrimp tortellini may be another matter. I think you used a bit too much garlic.

REFERENCES:

French, Gregory T. "Understanding the GPS: An Introduction to the Global Positioning System; What It Is and How It Works." GeoResearch, Inc., 1996.

Hofmann-Wellenhof, B., H. Lichtenegger, and J. Collins. "GPS Theory and Practice." 2nd ed. Springer-Verlag, Wien, New York, 1993.

Leick, Alfred. "GPS Satellite Surveying." Department of Surveying Engineering, University of Maine. John Wiley & Sons, 1990.

Shrestha, Ramesh L., Bon Dewitt, and Matt Wilson. "Applications of GPS in GIS." Surveying and Mapping, University of Florida, July 1993.

Trimble Navigation Inc. "Training Manual: Pro XL System with Pfinder Software." Part Number 30990-00, Revision A, June 1996.

Trimble Navigation Inc. "Mapping Systems: General Reference." Part Number 24177-00, Revision B, April 1996.

Trimble Navigation Inc. "Pathfinder Office Software." Vols. I-III. Part Numbers 31310-00, 31311-00, 31312-00, Revision A, October 1996.

Wells, David. "Guide to GPS Positioning." Canadian GPS Association, 1987.

W. Francis Mizell

GIS Analyst

Consultant

112 E. Oak Hill Dr.

Palatka, Fl. 32177

(904) 325-5819

Email: fram@bellsouth.net